7 Reporting and Results

Chapter 7 of the Dynamic Learning Maps® (DLM®) Alternate Assessment System 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017) describes assessment results for the 2015–2016 academic year, including student participation and performance summaries and an overview of data files and score reports delivered to state education agencies.

This chapter presents spring 2023 student participation data; the percentage of students achieving at each performance level; and subgroup performance by gender, race, ethnicity, and English learner status. This chapter also reports the distribution of students by the highest linkage level mastered during spring 2023.

For a complete description of score reports and interpretive guides, see Chapter 7 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017).

7.1 Student Participation

During spring 2023, assessments were administered to 41,822 students in twenty states. Counts of students tested in each state are displayed in Table 7.1. The assessments were administered by 17,266 educators in 10,613 schools and 4,097 school districts. A total of 367,284 test sessions were administered during the spring assessment window. One test session is one testlet taken by one student. Only test sessions that were complete at the close of the spring assessment window counted toward the total sessions.

| State | Students (n) |

|---|---|

| Alaska | 205 |

| Arkansas | 2,526 |

| Delaware | 302 |

| District of Columbia | 195 |

| Illinois | 4,393 |

| Iowa | 880 |

| Kansas | 947 |

| Maryland | 2,288 |

| Missouri | 2,376 |

| New Hampshire | 297 |

| New Jersey | 4,565 |

| New Mexico | 905 |

| New York | 5,276 |

| North Dakota | 254 |

| Oklahoma | 2,093 |

| Pennsylvania | 7,107 |

| Rhode Island | 403 |

| Utah | 3,632 |

| West Virginia | 622 |

| Wisconsin | 2,556 |

Table 7.2 summarizes the number of students assessed in each grade and course. More than 11,930 students participated in each of the elementary and the middle school grade bands. In an effort to increase science instruction beyond the tested grades, several states promoted participation in the science assessment at all grade levels (i.e., did not restrict participation to the grade levels required for accountability purposes). In high school, almost 15,000 students participated. The differences in high school grade-level participation can be traced to differing state-level policies about the grade(s) in which students are assessed.

| Grade | Students (n) |

|---|---|

| 3 | 451 |

| 4 | 3,979 |

| 5 | 7,508 |

| 6 | 894 |

| 7 | 946 |

| 8 | 13,101 |

| 9 | 3,919 |

| 10 | 1,827 |

| 11 | 8,060 |

| 12 | 103 |

| Biology | 1,034 |

Table 7.3 summarizes the demographic characteristics of the students who participated in the spring 2023 administration. The majority of participants were male (67%), White (59%), and non-Hispanic (79%). About 6% of students were eligible for or monitored for English learning (EL) services.

| Subgroup | n | % |

|---|---|---|

| Gender | ||

| Male | 27,949 | 66.8 |

| Female | 13,841 | 33.1 |

| Nonbinary/undesignated | 32 | 0.1 |

| Race | ||

| White | 24,754 | 59.2 |

| African American | 8,502 | 20.3 |

| Two or more races | 5,243 | 12.5 |

| Asian | 2,022 | 4.8 |

| American Indian | 962 | 2.3 |

| Native Hawaiian or Pacific Islander | 247 | 0.6 |

| Alaska Native | 92 | 0.2 |

| Hispanic ethnicity | ||

| Non-Hispanic | 33,027 | 79.0 |

| Hispanic | 8,795 | 21.0 |

| English learning (EL) participation | ||

| Not EL eligible or monitored | 39,352 | 94.1 |

| EL eligible or monitored | 2,470 | 5.9 |

In addition to the spring assessments, instructionally embedded science assessments are available for educators to administer optionally during the year. Results from the instructionally embedded assessments do not contribute to final summative scoring but can be used to guide instructional decision-making. State education agencies are allowed to set their own policies regarding requirements for participation in the instructionally embedded window. A total of 5,472 students in 14 states took at least one instructionally embedded testlet during the 2022–2023 academic year. Table 7.4 summarizes the number of students who completed at least one instructionally embedded assessment by state.

| State | n |

|---|---|

| Arkansas | 474 |

| Delaware | 53 |

| Iowa | 282 |

| Kansas | 234 |

| Maryland | 15 |

| Missouri | 1,400 |

| New Jersey | 2,731 |

| New Mexico | 26 |

| New York | 47 |

| North Dakota | 9 |

| Oklahoma | 171 |

| Utah | 22 |

| West Virginia | 7 |

| Wisconsin | 1 |

Table 7.5 summarizes the number of instructionally embedded testlets taken in science. Across all states, students took 30,766 science testlets during the instructionally embedded window.

| Grade | n |

|---|---|

| 3 | 915 |

| 4 | 1,098 |

| 5 | 7,963 |

| 6 | 1,345 |

| 7 | 1,343 |

| 8 | 8,489 |

| 9 | 931 |

| 10 | 1,797 |

| 11 | 6,494 |

| 12 | 391 |

| Total | 30,766 |

7.2 Student Performance

Student performance on DLM assessments is interpreted using cut points determined by a standard setting study. For a description of the standard setting process used to determine the cut points, see Chapter 6 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017). Student achievement is described using four performance levels. A student’s performance level is determined by the total number of linkage levels mastered across the assessed Essential Elements (EEs).

For the spring 2023 administration, student performance was reported using the same four performance levels approved by the DLM Governance Board for prior years:

- The student demonstrates Emerging understanding of and ability to apply content knowledge and skills represented by the EEs.

- The student’s understanding of and ability to apply targeted content knowledge and skills represented by the EEs is Approaching the Target.

- The student’s understanding of and ability to apply content knowledge and skills represented by the EEs is At Target. This performance level is considered meeting achievement expectations.

- The student demonstrates Advanced understanding of and ability to apply targeted content knowledge and skills represented by the EEs.
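
As a rough illustration of how a total count of mastered linkage levels maps onto the four performance levels, the sketch below applies three cut points to that count. The cut values shown are hypothetical placeholders chosen only for the example; the operational cut points come from the standard setting study and are not listed in this chapter. The function name `performance_level` is likewise an assumption.

```python
from bisect import bisect_right

# Hypothetical cut points (NOT the operational values): the minimum total number
# of linkage levels mastered needed to reach Approaching the Target, At Target,
# and Advanced, respectively.
EXAMPLE_CUTS = (8, 15, 22)

PERFORMANCE_LEVELS = (
    "Emerging",
    "Approaching the Target",
    "At Target",
    "Advanced",
)


def performance_level(total_mastered, cuts=EXAMPLE_CUTS):
    """Map a total count of mastered linkage levels to a performance level.

    bisect_right counts how many cut points are less than or equal to the
    total, which is the index of the performance level reached.
    """
    return PERFORMANCE_LEVELS[bisect_right(cuts, total_mastered)]


if __name__ == "__main__":
    for total in (0, 8, 15, 30):
        print(total, performance_level(total))
```

With real cut points substituted for `EXAMPLE_CUTS`, the same lookup would apply separately within each grade or course, since cut points are typically set per grade.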

7.2.1 Overall Performance

Table 7.6 reports the percentage of students achieving at each performance level from the spring 2023 administration for science. At the elementary level, the percentage of students who achieved at the At Target or Advanced levels (i.e., proficient) was approximately 15%; in middle school the percentage of students meeting or exceeding At Target expectations was approximately 23%; in high school the percentage was approximately 20%; in end-of-instruction biology the percentage was approximately 17%.

| Grade | n | Emerging (%) | Approaching (%) | At Target (%) | Advanced (%) | At Target + Advanced (%) |

|---|---|---|---|---|---|---|

| 3 | 451 | 64.7 | 16.4 | 9.3 | 9.5 | 18.8 |

| 4 | 3,979 | 64.0 | 18.9 | 13.1 | 3.9 | 17.0 |

| 5 | 7,508 | 67.7 | 18.6 | 13.0 | 0.8 | 13.8 |

| 6 | 894 | 66.6 | 18.0 | 10.2 | 5.3 | 15.4 |

| 7 | 946 | 61.3 | 18.3 | 14.5 | 5.9 | 20.4 |

| 8 | 13,101 | 56.5 | 19.7 | 19.1 | 4.7 | 23.8 |

| 9 | 3,919 | 53.1 | 25.2 | 15.4 | 6.3 | 21.7 |

| 10 | 1,827 | 58.0 | 25.5 | 12.8 | 3.8 | 16.6 |

| 11 | 8,060 | 55.0 | 25.7 | 14.5 | 4.8 | 19.3 |

| 12 | 103 | 50.5 | 27.2 | 11.7 | 10.7 | 22.3 |

| Biology | 1,034 | 65.1 | 17.5 | 12.7 | 4.7 | 17.4 |
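
The grade-band percentages quoted before Table 7.6 can be approximated from the table itself by weighting each grade's At Target + Advanced percentage by its student count. The sketch below does this under the assumption that the bands are grades 3–5, 6–8, and 9–12; because the table percentages are rounded to one decimal place, the results are approximations rather than the exact operational values. The names `TABLE_7_6`, `BANDS`, and `band_percent` are illustrative.

```python
# (students tested, At Target + Advanced %) copied from Table 7.6.
TABLE_7_6 = {
    "3": (451, 18.8), "4": (3979, 17.0), "5": (7508, 13.8),
    "6": (894, 15.4), "7": (946, 20.4), "8": (13101, 23.8),
    "9": (3919, 21.7), "10": (1827, 16.6), "11": (8060, 19.3),
    "12": (103, 22.3),
}

# Assumed grade-band definitions.
BANDS = {
    "Elementary": ["3", "4", "5"],
    "Middle school": ["6", "7", "8"],
    "High school": ["9", "10", "11", "12"],
}


def band_percent(grades, table=TABLE_7_6):
    """Student-weighted At Target + Advanced percentage across the given grades."""
    n_total = sum(table[g][0] for g in grades)
    weighted = sum(table[g][0] * table[g][1] for g in grades)
    return weighted / n_total


if __name__ == "__main__":
    for band, grades in BANDS.items():
        print(f"{band}: {band_percent(grades):.1f}%")
```

Rounded to whole numbers, the weighted values agree with the approximate 15%, 23%, and 20% reported in the text.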

7.2.2 Subgroup Performance

Data collection for DLM assessments includes demographic data on gender, race, ethnicity, and English learning status. Table 7.7 summarizes the disaggregated frequency distributions for science performance levels, collapsed across all assessed grade levels. Although state education agencies each have their own rules for minimum student counts needed to support public reporting of results, small counts are not suppressed here because results are aggregated across states and individual students cannot be identified.

| Subgroup | Emerging (n) | Emerging (%) | Approaching (n) | Approaching (%) | At Target (n) | At Target (%) | Advanced (n) | Advanced (%) | At Target + Advanced (n) | At Target + Advanced (%) |

|---|---|---|---|---|---|---|---|---|---|---|

| Gender | ||||||||||

| Male | 16,406 | 58.7 | 5,815 | 20.8 | 4,466 | 16.0 | 1,262 | 4.5 | 5,728 | 20.5 |

| Female | 8,365 | 60.4 | 3,043 | 22.0 | 1,950 | 14.1 | 483 | 3.5 | 2,433 | 17.6 |

| Nonbinary/undesignated | 20 | 62.5 | 10 | 31.2 | 2 | 6.2 | 0 | 0.0 | 2 | 6.2 |

| Race | ||||||||||

| White | 14,304 | 57.8 | 5,332 | 21.5 | 3,995 | 16.1 | 1,123 | 4.5 | 5,118 | 20.7 |

| African American | 5,141 | 60.5 | 1,772 | 20.8 | 1,271 | 14.9 | 318 | 3.7 | 1,589 | 18.7 |

| Two or more races | 3,233 | 61.7 | 1,132 | 21.6 | 688 | 13.1 | 190 | 3.6 | 878 | 16.7 |

| Asian | 1,395 | 69.0 | 353 | 17.5 | 230 | 11.4 | 44 | 2.2 | 274 | 13.6 |

| American Indian | 502 | 52.2 | 217 | 22.6 | 183 | 19.0 | 60 | 6.2 | 243 | 25.3 |

| Native Hawaiian or Pacific Islander | 153 | 61.9 | 45 | 18.2 | 41 | 16.6 | 8 | 3.2 | 49 | 19.8 |

| Alaska Native | 63 | 68.5 | 17 | 18.5 | 10 | 10.9 | 2 | 2.2 | 12 | 13.0 |

| Hispanic ethnicity | ||||||||||

| Non-Hispanic | 19,546 | 59.2 | 6,993 | 21.2 | 5,100 | 15.4 | 1,388 | 4.2 | 6,488 | 19.6 |

| Hispanic | 5,245 | 59.6 | 1,875 | 21.3 | 1,318 | 15.0 | 357 | 4.1 | 1,675 | 19.0 |

| English learning (EL) participation | ||||||||||

| Not EL eligible or monitored | 23,362 | 59.4 | 8,328 | 21.2 | 6,038 | 15.3 | 1,624 | 4.1 | 7,662 | 19.5 |

| EL eligible or monitored | 1,429 | 57.9 | 540 | 21.9 | 380 | 15.4 | 121 | 4.9 | 501 | 20.3 |

7.3 Mastery Results

As described above, student performance levels are determined by applying cut points to the total number of linkage levels mastered. This section summarizes student mastery of assessed EEs and linkage levels, including how students demonstrated mastery from among three scoring rules and the highest linkage level students tended to master.

7.3.1 Mastery Status Assignment

As described in Chapter 5 of the 2021–2022 Technical Manual Update—Science (Dynamic Learning Maps Consortium, 2022), student responses to assessment items are used to estimate the posterior probability that the student mastered each of the assessed linkage levels using diagnostic classification modeling. The linkage levels, in order, are: Initial, Precursor, and Target. A student can be a master of zero, one, two, or all three linkage levels, within the order constraints. For example, if a student masters the Precursor level, they also master the Initial linkage level.

Students with a posterior probability of mastery greater than or equal to .80 are assigned a linkage level mastery status of 1, or mastered. Students with a posterior probability of mastery less than .80 are assigned a linkage level mastery status of 0, or not mastered. Maximum uncertainty in the mastery status occurs when the probability is .5, and maximum certainty occurs when the probability approaches 0 or 1.

In addition to the calculated probability of mastery, students could be assigned mastery of linkage levels within an EE in two other ways: correctly answering 80% of all items administered at the linkage level or through the two-down scoring rule. The two-down scoring rule was implemented to guard against students assessed at the highest linkage levels being overly penalized for incorrect responses. When a student did not demonstrate mastery of the assessed linkage level, mastery was assigned at two linkage levels below the level that was assessed. Theoretical evidence for the use of the two-down rule based on DLM content structures is presented in Chapter 2 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017).

As an example of the two-down scoring rule, take a student who tested only on the Target linkage level of an EE. If the student demonstrated mastery of the Target linkage level, as defined by the .80 posterior probability of mastery cutoff or the 80% correct rule, then all linkage levels below and including the Target level would be categorized as mastered. If the student did not demonstrate mastery on the tested Target linkage level, then mastery would be assigned at two linkage levels below the tested linkage level (i.e., mastery of the Initial), rather than showing no evidence of EE mastery at all.
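
The three scoring rules described above can be sketched for a single EE as follows. This is a simplified illustration, not the operational scoring code: it assumes one tested linkage level per EE, reads the 80% correct rule as "at least 80%," and uses hypothetical function and argument names (`highest_level_mastered`, `posterior`, `n_correct`, `n_items`).

```python
LINKAGE_LEVELS = ("Initial", "Precursor", "Target")


def highest_level_mastered(tested_level, posterior, n_correct, n_items,
                           prob_cut=0.80, pct_cut=80):
    """Return (highest linkage level mastered or None, rule that assigned it).

    Rules are checked in priority order: posterior probability, then
    percentage correct, then two-down.
    """
    tested_idx = LINKAGE_LEVELS.index(tested_level)

    # Rule 1: posterior probability of mastery at or above the threshold.
    if posterior >= prob_cut:
        return tested_level, "posterior probability"

    # Rule 2: percentage of items answered correctly (integer arithmetic
    # avoids floating-point edge cases at exactly 80%).
    if n_items > 0 and n_correct * 100 >= pct_cut * n_items:
        return tested_level, "percentage correct"

    # Rule 3: two-down. Only reachable when a level exists two steps below
    # the tested level, which here means the Target level was tested.
    if tested_idx >= 2:
        return LINKAGE_LEVELS[tested_idx - 2], "two-down"

    return None, "no evidence of mastery"


def mastered_levels(highest):
    """All levels at or below the highest mastered level count as mastered."""
    if highest is None:
        return []
    return list(LINKAGE_LEVELS[: LINKAGE_LEVELS.index(highest) + 1])


if __name__ == "__main__":
    level, rule = highest_level_mastered("Target", 0.42, 1, 4)
    print(level, rule, mastered_levels(level))
```

In the example call, a student tested at the Target level who meets neither the posterior probability threshold nor the percentage correct rule is assigned mastery of the Initial level through the two-down rule, matching the worked example in the text.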

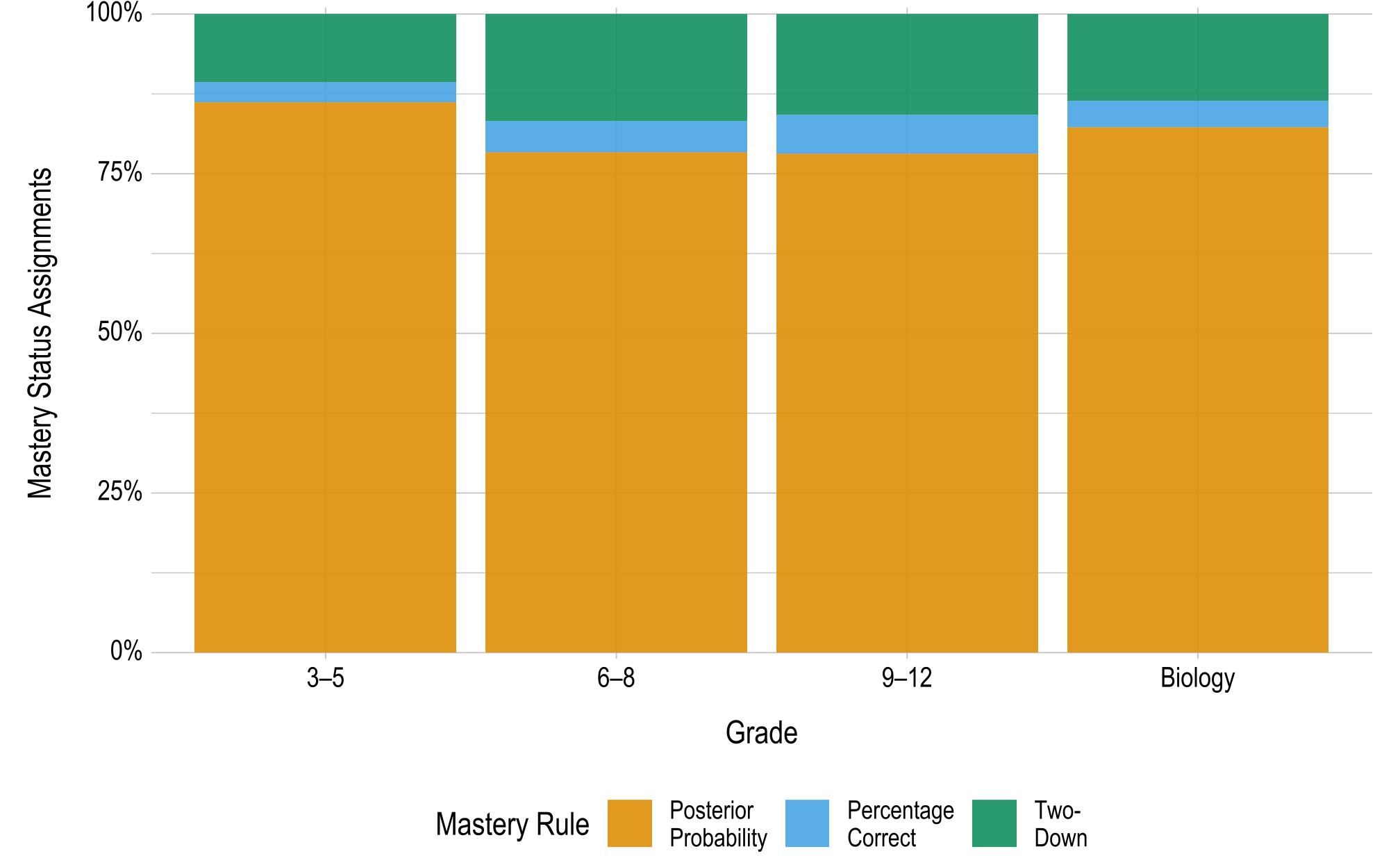

The percentage of mastery statuses obtained by each scoring rule was calculated to evaluate how each mastery assignment rule contributed to students’ linkage level mastery statuses during the 2022–2023 administration of DLM assessments, as shown in Figure 7.1. Posterior probability was given first priority. That is, if scoring rules agreed on the highest linkage level mastered within an EE (i.e., the posterior probability and 80% correct both indicate the Target linkage level as the highest mastered), the mastery status was counted as obtained via the posterior probability. If mastery was not demonstrated by meeting the posterior probability threshold, the 80% scoring rule was imposed, followed by the two-down rule. This means that EEs that were assessed by a student at the lowest two linkage levels (i.e., Initial and Precursor) are never categorized as having mastery assigned by the two-down rule. This is because the student would either master the assessed linkage level and have the EE counted under the posterior probability or 80% correct scoring rule, or all three scoring rules would agree on the score (i.e., no evidence of mastery), in which case preference would be given to the posterior probability. Across grades, approximately 78%–86% of mastered linkage levels were derived from the posterior probability obtained from the modeling procedure. Approximately 3%–6% of linkage levels were assigned mastery status by the percentage correct rule. The remaining 11%–17% of mastered linkage levels were determined by the two-down rule.

Figure 7.1: Linkage Level Mastery Assignment by Mastery Rule for Each Grade Band or Course

Because correct responses to all items measuring the linkage level are often necessary to achieve a posterior probability above the .80 threshold, the percentage correct rule overlaps considerably with the posterior probabilities (but is second in priority). The percentage correct rule did provide mastery status in instances where correctly responding to all or most items still resulted in a posterior probability below the mastery threshold. The agreement between the posterior probability and percentage correct rules was quantified by examining the rate of agreement between the highest linkage level mastered for each EE for each student using each method. For the 2022–2023 operational year, the rate of agreement between the two methods was 86%. When the two methods disagreed, the posterior probability method indicated a higher level of mastery (and therefore was implemented for scoring) in 66% of cases. Thus, in some instances, the posterior probabilities allowed students to demonstrate mastery when the percentage correct was lower than 80% (e.g., a student completed a four-item testlet and answered three of four items correctly).
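
The agreement statistics described above can be illustrated with a small sketch. It assumes each student-by-EE record has been reduced to a pair of integers giving the highest linkage level mastered under each method (0 for no evidence, 1 for Initial, 2 for Precursor, 3 for Target). The function name `agreement_summary` and the toy data are hypothetical and are not drawn from operational results.

```python
def agreement_summary(pairs):
    """Summarize agreement between two mastery methods.

    pairs: iterable of (posterior_level, pct_correct_level) tuples, one per
    student-by-EE combination, with levels coded 0-3.
    """
    pairs = list(pairs)
    n_agree = sum(1 for post, pct in pairs if post == pct)
    n_disagree = len(pairs) - n_agree
    n_posterior_higher = sum(1 for post, pct in pairs if post > pct)
    return {
        # Share of records where both methods give the same highest level.
        "agreement_rate": n_agree / len(pairs),
        # Among disagreements, share where the posterior method is higher.
        "posterior_higher_rate": (
            n_posterior_higher / n_disagree if n_disagree else None
        ),
    }


if __name__ == "__main__":
    toy_pairs = [(3, 3), (2, 2), (1, 0), (0, 1), (2, 1)]
    print(agreement_summary(toy_pairs))
```

The first statistic corresponds to the reported rate of agreement, and the second to the share of disagreements in which the posterior probability method indicated the higher level.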

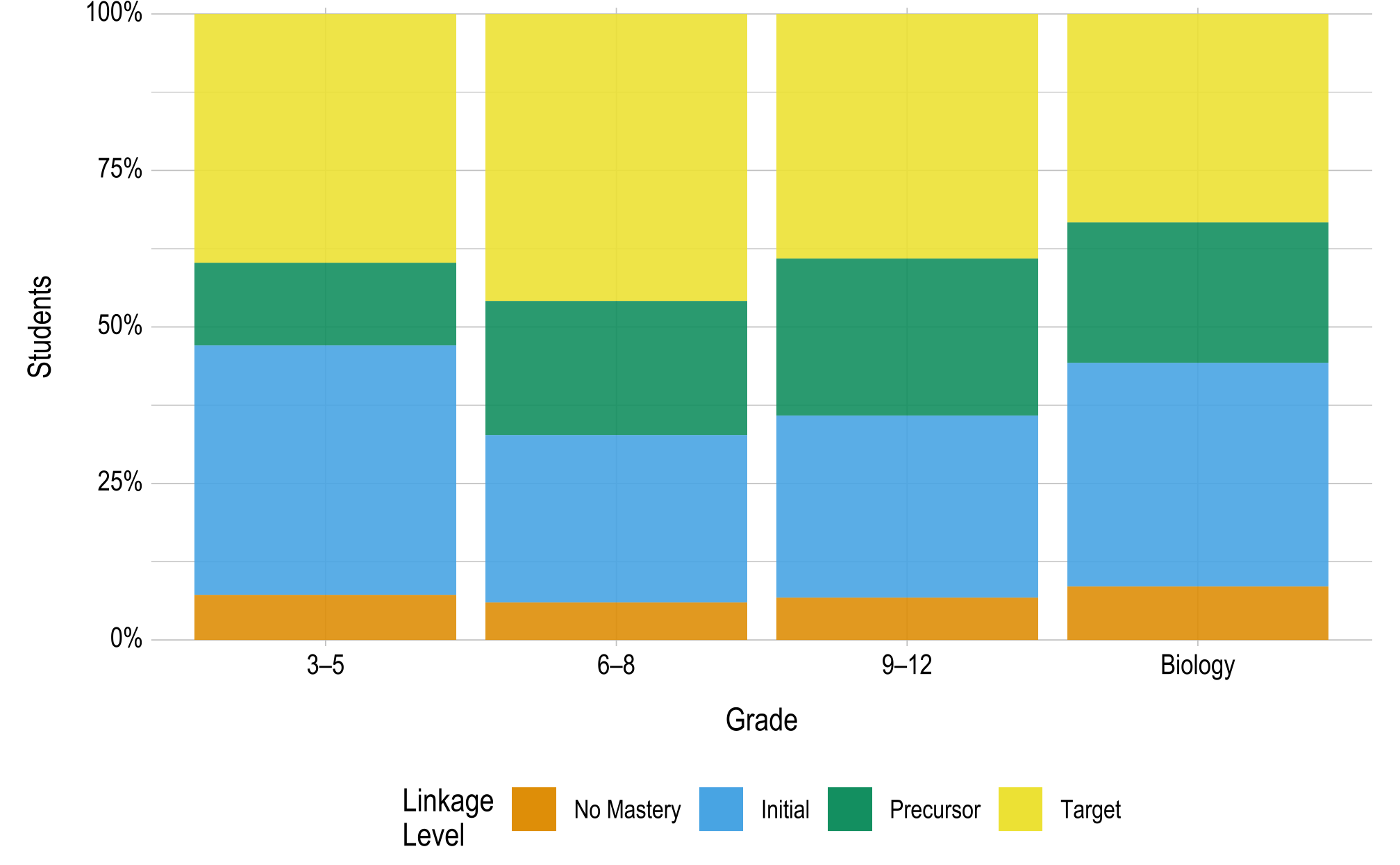

7.3.2 Linkage Level Mastery

Scoring for DLM assessments determines the highest linkage level mastered for each EE. This section summarizes the distribution of students by highest linkage level mastered across all EEs. For each student, the highest linkage level mastered across all tested EEs was calculated. Then, for each grade, the number of students with each linkage level as their highest mastered linkage level across all EEs was summed and then divided by the total number of students who tested in the grade. This resulted in the proportion of students for whom each level was the highest linkage level mastered.
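
The calculation described above can be sketched for a single grade as follows. The sketch assumes each student's results are available as a list of highest mastered levels per tested EE, coded 0 (no evidence) through 3 (Target). The "No evidence" category, the function name `highest_level_distribution`, and the toy data are assumptions made for illustration.

```python
from collections import Counter

LEVEL_NAMES = ("No evidence", "Initial", "Precursor", "Target")


def highest_level_distribution(students):
    """Proportion of students by highest linkage level mastered across EEs.

    students: dict mapping a student id to a list of integers (0-3), one per
    tested EE, giving the highest linkage level mastered for that EE.
    """
    # Step 1: each student's highest level across all tested EEs.
    counts = Counter(max(levels) for levels in students.values())
    # Step 2: divide each count by the number of students tested in the grade.
    n_students = len(students)
    return {
        LEVEL_NAMES[code]: counts.get(code, 0) / n_students
        for code in range(len(LEVEL_NAMES))
    }


if __name__ == "__main__":
    toy_grade = {
        "s1": [1, 0, 1],
        "s2": [3, 2, 1],
        "s3": [2, 2, 0],
        "s4": [0, 0, 0],
    }
    print(highest_level_distribution(toy_grade))
```

Running the function once per grade would produce the per-grade proportions summarized in the figure.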

Figure 7.2 displays the percentage of students who mastered each linkage level as the highest linkage level across all assessed EEs in science. For example, across all grade 3–5 science EEs, the Initial level was the highest level mastered by 40% of students. The percentage of students whose highest mastered level was the Target linkage level ranged from approximately 33% to 46%.

Figure 7.2: Students’ Highest Linkage Level Mastered Across Science Essential Elements by Grade

7.4 Data Files

DLM assessment results were made available to DLM state education agencies following the spring 2023 administration. Similar to prior years, the General Research File (GRF) contained student results, including each student’s highest linkage level mastered for each EE and final performance level for science for all students who completed any testlets. In addition to the GRF, the states received several supplemental files. Consistent with prior years, the special circumstances file provided information about which students and EEs were affected by extenuating circumstances (e.g., chronic absences), as defined by each state. State education agencies also received a supplemental file to identify exited students. The exited students file included all students who exited at any point during the academic year. In the event of observed incidents during assessment delivery, state education agencies are provided with an incident file describing students impacted.

Consistent with prior delivery cycles, state education agencies were provided with a two-week window following data file delivery to review the files and invalidate student records in the GRF. Decisions about whether to invalidate student records are informed by individual state policy. If changes were made to the GRF, state education agencies submitted final GRFs via Educator Portal. The final GRF was used to generate score reports.

7.5 Score Reports

Assessment results were provided to state education agencies to report to parents/guardians, educators, and local education agencies. Individual Student Score Reports summarized student performance on the assessment. Several aggregated reports were provided to state and local education agencies, including reports for the classroom, school, district, and state.

No changes were made to the structure of individual or aggregated reports during spring 2023. For a complete description of score reports, including aggregated reports, see Chapter 7 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017).

7.5.1 Individual Student Score Reports

Similar to prior years, Individual Student Score Reports included two sections: a Performance Profile section, which describes student performance in the subject overall, and a Learning Profile section, which provides detailed reporting of student mastery of individual skills. Chapter 7 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017) describes evidence related to the development, interpretation, and use of Individual Student Score Reports and contains sample pages of the Performance Profile and Learning Profile.

7.6 Quality-Control Procedures for Data Files and Score Reports

No changes were made to the quality-control procedures for data files and score reports for 2022–2023. For a complete description of quality-control procedures, see Chapter 7 of the 2015–2016 Technical Manual—Science (Dynamic Learning Maps Consortium, 2017).

7.7 Conclusion

Results for DLM assessments include students’ overall performance levels and mastery decisions for each assessed EE and linkage level. During spring 2023, science assessments were administered to 41,822 students in twenty states. Between 14% and 24% of students achieved at the At Target or Advanced levels across all grades. Of the three scoring rules, linkage level mastery status was most frequently assigned by the posterior probability of mastery.

Lastly, following the spring 2023 administration, four data files were delivered to state education agencies: the GRF, the special circumstance code file, the exited students file, and an incident file. No changes were made to the structure of data files, score reports, or quality-control procedures during 2022–2023.